IaC in my home lab

Earlier this year, I set up a Kubernetes lab by hand, with numerous applications running on a multi-node RKE2 Kubernetes cluster. The setup was hosted on an ESXi server, where I configured multiple VMs manually. However, living in Texas, I see occasional power disruptions, and during one such incident my ESXi server lost power and the cluster failed. Because I had not documented the setup thoroughly, I could not restore it promptly, which left me discouraged at the time.

Now, with renewed determination, I am embarking on a journey to recreate the cluster, ensuring a more resilient and well-documented infrastructure that aligns with my initial vision and safeguards against potential setbacks. By revisiting this endeavor, I aim to set it up properly, implementing best practices and capturing every essential step for future reference.

I invite you to join me as I navigate through the complexities of setting up the Kubernetes cluster, learning and adapting along the way. Together, we will witness the transformation of this project into a robust and well-orchestrated system.

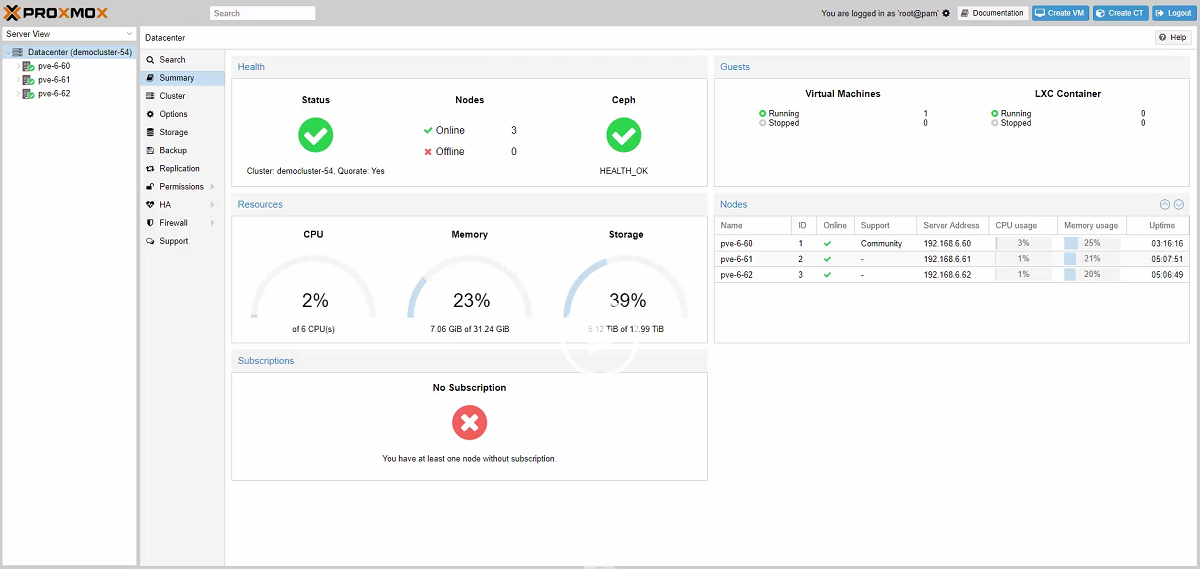

In this first post I will focus on how I set up Infrastructure as Code (IaC) in my lab. I previously ran my VMs on ESXi; I have now switched to Proxmox so that I do not have to pay for a VMware license. For my code repository I will use the public GitLab, and for IaC I will use Terraform.

All files for this post can be found at iac_in_my_home_lab

PROXMOX Server

First you will need to set up a PROXMOX server according to PROXMOX documentation here: PROXMOX Documentation Link

PROXMOX Cloud-Init Template

Next we will need to create a cloud-init template to deploy our images in PROXMOX from terraform.

To create a cloud-init template we will first need to ssh or shell into the server running proxmox and run a few commands.

# download the image

wget https://cloud-images.ubuntu.com/bionic/current/bionic-server-cloudimg-amd64.img

# create a new VM with VirtIO SCSI controller

qm create 9000 --memory 2048 --net0 virtio,bridge=vmbr0 --scsihw virtio-scsi-pci

# import the downloaded disk to the local-lvm storage, attaching it as a SCSI drive
# (replace /path/to with the absolute path of the directory where you ran wget)

qm set 9000 --scsi0 local-lvm:0,import-from=/path/to/bionic-server-cloudimg-amd64.img

The next step is to configure a CD-ROM drive, which will be used to pass the Cloud-Init data to the VM.

qm set 9000 --ide2 local-lvm:cloudinit

To be able to boot directly from the Cloud-Init image, set the boot parameter to order=scsi0 to restrict BIOS to boot from this disk only. This will speed up booting, because VM BIOS skips the testing for a bootable CD-ROM.

qm set 9000 --boot order=scsi0

For many Cloud-Init images, it is required to configure a serial console and use it as a display. If the configuration doesn’t work for a given image however, switch back to the default display instead.

qm set 9000 --serial0 socket --vga serial0

In a last step, it is helpful to convert the VM into a template. From this template you can then quickly create linked clones. The deployment from VM templates is much faster than creating a full clone (copy).

qm template 9000

After running these commands you should see a template with VM ID 9000 in the Proxmox GUI.

We now have a template that we can take and deploy with our terraform.

Build out the terraform

In order to use terraform as Infrastructure as Code we must first install it on our system.

Please follow the linked documentation to install Terraform on the host you will run the code from.

Terraform code

In order to run our terraform we will need to create a few files and change a few variables in the variables.tf file.

Create 3 blank files named:

- version.tf

- main.tf

- variables.tf

Place these files in an empty directory that you create. From this point on I will refer to it as the Terraform directory.

Copy and paste the code below in the files you created above.

version.tf

terraform {
  required_providers {
    proxmox = {
      source  = "telmate/proxmox"
      version = "2.9.11"
    }
  }
  required_version = ">= 0.13"
}

provider "proxmox" {
  pm_api_url      = var.proxmox_api_url
  pm_user         = var.proxmox_username
  pm_password     = var.proxmox_password
  pm_tls_insecure = true
  pm_debug        = true
}

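In the version.tf file above we pin the Proxmox provider that Terraform will use to deploy our infrastructure and configure how it connects to the Proxmox API. Note that pm_tls_insecure = true disables TLS certificate verification, which is convenient with the self-signed certificate of a fresh Proxmox install, and pm_debug = true enables verbose provider output.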

main.tf

resource "proxmox_vm_qemu" "vm-clone-1" {
  count       = 1
  name        = "vm-clone-1"
  target_node = var.proxmox_target_node

  # Clone the cloud-init template as a full (independent) copy
  clone      = var.template_name
  full_clone = true

  # VM sizing
  cores   = 2
  sockets = 1
  memory  = 2048

  # Cloud-init settings: login user, SSH key, and a static IP with a /24 prefix
  ciuser     = var.vm_username
  cipassword = var.vm_password
  sshkeys    = var.host_public_ssh_key
  ipconfig0  = "ip=${var.vm_ip_address}/24,gw=${var.vm_gw_address}"

  boot = "order=scsi0"

  network {
    model  = "virtio"
    bridge = "vmbr0"
  }
}

In the main.tf file we define the vm-clone-1 resource, the VM that the Terraform provider will create on our Proxmox server by cloning the template.
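As an optional extra, output values can print useful details once the VM has been created. The sketch below is not part of the original files: the file name outputs.tf and the output names vm_names and ssh_command are my own choices for illustration, and it assumes the resource and variables are named exactly as in main.tf and variables.tf.

```hcl
# outputs.tf (hypothetical file, not one of the three files from this post)

# The resource uses count, so it is a list; the splat [*] collects every name.
output "vm_names" {
  description = "Names of the VMs created from the template"
  value       = proxmox_vm_qemu.vm-clone-1[*].name
}

# Builds the SSH command from the same variables main.tf passes to cloud-init.
output "ssh_command" {
  description = "Command to log in to the new VM"
  value       = "ssh ${var.vm_username}@${var.vm_ip_address}"
}
```

After an apply, Terraform prints these values, and terraform output shows them again later.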

variables.tf

variable "host_public_ssh_key" {
  default = ""
}

variable "proxmox_target_node" {
  default = "pve"
}

variable "template_name" {
  default = "VM 9000"
}

variable "vm_ip_address" {
  default = ""
}

variable "vm_gw_address" {
  default = ""
}

variable "vm_username" {
  default = "ubuntu"
}

variable "vm_password" {
  default = "p@ssword123!"
}

variable "proxmox_username" {
  default = ""
}

variable "proxmox_password" {
  default = ""
}

variable "proxmox_api_url" {
  default = ""
}

In this variables.tf file we define the variables we used in our main.tf and version.tf files. In the next section I will explain each of the variables in variables.tf.
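Since this code is headed for a public GitLab repository, it may be worth keeping passwords out of the defaults. The following is a minimal sketch of how the two password variables could be declared instead; it would replace the matching blocks in variables.tf rather than sit next to them. The description strings are my own wording, and sensitive = true assumes Terraform 0.14 or newer, which is stricter than the ">= 0.13" constraint in version.tf.

```hcl
# Sketch: typed declarations with no default, so no secret lives in the repo.
# `sensitive = true` needs Terraform 0.14+ and hides the value in plan/apply output.
variable "proxmox_password" {
  description = "Password for the Proxmox API user"
  type        = string
  sensitive   = true
}

variable "vm_password" {
  description = "Password cloud-init sets for the VM user"
  type        = string
  sensitive   = true
}
```

With no default, Terraform asks for the value interactively unless it is supplied another way, for example through a terraform.tfvars file or an environment variable named TF_VAR_proxmox_password.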

Variables

host_public_ssh_key : In order to SSH to the VM we deploy, we have to define our public SSH key. This is the public key of the machine you will be SSHing from. Set the variable to the contents of the key file, not its path. The default locations for this key are listed below.

Windows : C:\Users

Linux : ~/.ssh/id_rsa.pub

Mac : ~/.ssh/id_rsa.pub

proxmox_target_node : The proxmox target node is the name of the proxmox server you created. The default value for this is pve.

template_name : The template name is the name of the template our terraform will be deploying.

If you followed my commands earlier, the template has VM ID 9000 and was created without an explicit name, so the default from variables.tf, "VM 9000", should match.

vm_username : The VM username is the user that cloud-init creates on the VM and that you will log in as.

vm_password : The VM password is the password cloud-init sets for that user.

proxmox_username : The Proxmox username is the username you use to log in to your Proxmox server. Proxmox has two authentication methods: Linux PAM standard authentication and Proxmox VE authentication server.

If you are using Proxmox VE authentication server your user will be in the format of "user@pve"

If you are using Linux PAM standard authentication your user will be in the format of "user@pam"

If you want to use the root user you will need to use "root@pam" for this variable

proxmox_password : The proxmox password is the password you set to login to your proxmox server.

vm_ip_address : The VM IP address is the IP address you want your deployed VM to have. Enter it without a prefix length; main.tf appends /24.

vm_gw_address : The VM gateway address is the IP address of your network's default gateway.

proxmox_api_url : The Proxmox API URL is the URL of your Proxmox server's API.

Example format: https://(ProxmoxIP):8006/api2/json
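One way to set these variables without editing the defaults in variables.tf is a terraform.tfvars file in the Terraform directory, which Terraform loads automatically. The sketch below is only an illustration: every value is a made-up placeholder (the IP addresses, the passwords, and the shortened SSH key are not from my lab), so replace each one with your own.

```hcl
# terraform.tfvars (hypothetical values; replace every one with your own)
proxmox_api_url     = "https://192.168.1.10:8006/api2/json"
proxmox_username    = "root@pam"
proxmox_password    = "change-me"
proxmox_target_node = "pve"

template_name       = "VM 9000"
host_public_ssh_key = "ssh-rsa AAAA... user@workstation" # paste the full contents of your public key file

vm_ip_address = "192.168.1.50"
vm_gw_address = "192.168.1.1"
vm_username   = "ubuntu"
vm_password   = "change-me-too"
```

Because this file holds passwords, it is a good idea to keep it out of the Git repository, for example by listing it in .gitignore.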

Running the terraform

We first need to initialize the Terraform directory. This downloads the providers Terraform needs to execute our code and makes sure the directory is set up correctly.

*Execute the command below in the Terraform directory:*

terraform init

After terraform init we run terraform plan, which shows all the changes that would be made by a terraform apply.

*Execute the command below in the Terraform directory:*

terraform plan

After reviewing the plan we run terraform apply. This is the command that creates the VM on our Proxmox server.

*Execute the command below in the Terraform directory:*

terraform apply

At the end of this lab, if you would like to destroy your VM, you can do so with the following command.

*Execute the command below in the Terraform directory:*

terraform destroy

Conclusion

*At the end of this you should have a working model of how to use Infrastructure as Code in your home lab. In future posts I will talk about how to create a pipeline in GitLab to deploy Kubernetes nodes on your Proxmox server and how to deploy Kubernetes on those nodes in an automated fashion managed in GitLab.*